I’ve often found cause to complain about the slow uptake of the latest server technologies when working on different projects.

I take it all back…

Nine hours into recompiling Apache and PHP on Mac OS X Server.

What does matter is the way the sites link to you and how often new links show up to your site.

If you wake up tomorrow and, overnight, 1 million other sites decided to link to you, that would not affect your position in any way (what it may do is hurt your listing because it will look like spam).

Here is how links work for you:

No I’m not talking 80’s street.

You may be able to get your site listed in the top ten within 30 – 60 days, but that’s no guarantee it will stay there.

As per my previous example of pizzajim, they were at number one because we put them there, but as the updates are few and far between (mostly new menus), the site has started to become a bit stale.

If your site is not continually being updated with fresh content and growing, then the search engines will simply dump it in favor of newer, fresher sites. You should be adding a new page to your site every time the crawler visits and updating your old content as much as you can. Daily is preferred, but weekly at the very least. This will mean that the crawler will have fresh content to index and new links to explore. Thus, it will never see your site as stale and drop it.

The major search engines, i.e. Yahoo, Google and MSN, all say the same thing on their webmaster pages, and that is “some web crawlers have difficulty reading the context of these pages”.

What it doesn’t say is “don’t use Flash or framed pages because this is a no-no”. The reason I want to make that clear is that a lot of SEOs will try to tell you that this is a bad thing and that all your pages need to be flat static pages with lots of alt text behind your flat images. This is not the case.

We have gotten many websites into the top ten and number one spots, some of which use both Flash and framed pages. Case in point: search “pizza barton” in Google and, out of more than three quarters of a million results, our site pizzajim.co.uk comes in at number three (it’s slipped from number one, admittedly).

Some do not even have any indexable text or links on the home page. So how do we do it? Simple, and it’s something you should be doing anyway. You should have an alternative version of your site which viewers with Flash installed will be directed to. For a framed site, you should also have a non-framed version for people whose browsers don’t support framed pages.

Now that you know how simple it is, here is the temptation. Because your index page will most likely only be seen by maybe 10% of the people that come to your site, the temptation will be to flood that page with keywords and search text to get yourself bumped up the search, and that will happen. However, when you get caught (which you will), you will be dumped. This page should only contain a stripped-down version of your site and should only contain the same text content as your Flash or framed site.

Twitter and nofollow is the topic of this week’s Twitter column. Google, along with other search engines, introduced the nofollow attribute a few years ago to combat spam, and even Google no longer promotes its use. Twitter, however, went nofollow big time just recently. What does this mean?

Twitter will not improve traffic to your site, other than from the people reading your tweets.

It’s not only outgoing links that get the so-called link condom so that search engines ignore them. Now internal links from your tweets on Twitter get wasted as well.

While you might argue that you don’t tweet to get links or for the SEO of it, this means that everything you say gets treated like spam.
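If you want to see for yourself which links on a page carry the attribute, a rough sketch like the one below can help. It uses only Python’s standard `html.parser`; the class name `LinkRelParser` and the function `classify_links` are made up for this illustration, and the HTML is assumed to have been saved or fetched already.

```python
from html.parser import HTMLParser


class LinkRelParser(HTMLParser):
    """Collects every <a href=...> and whether its rel attribute includes nofollow."""

    def __init__(self):
        super().__init__()
        self.links = []  # list of (href, is_nofollow) tuples

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if href is None:
            return  # anchors without href are not links
        # rel is a space-separated token list, e.g. rel="nofollow external"
        rel_tokens = (attr.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_tokens))


def classify_links(html):
    """Return a list of (href, is_nofollow) for every link in the HTML string."""
    parser = LinkRelParser()
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    sample = '<a href="http://example.com" rel="nofollow">out</a> <a href="/about">in</a>'
    for href, nofollow in classify_links(sample):
        print(href, "nofollow" if nofollow else "followed")
```

Any link reported as nofollow is one that search engines are being asked not to count.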

Andy Beard, a well-known figure in the SEO industry, has even quit Twitter due to their new disdain for their users. Now, many people don’t know Andy Beard. He’s not Danny Sullivan or John Battelle, but his voice gets heard in the marketing community. He’s also an early adopter, which means he knows when to leave a site and seek alternatives. He’s quite active on Google Buzz right now instead.

Andy points out that the nofollow attribute and other hurdles lead to very poor indexing and searchability of tweets. In other words: Your tweets get lost very quickly. In order to archive your tweets and relationships you basically need a third party tool or rather more than one. I don’t know one single tool that is able to both save all of my tweets and those of others who address me and make them all easily searchable while keeping an up to date list of my friends.

I archive my own tweets using RSS readers. I simply subscribe to them. A backup of my relationships is not that easy, though. I haven’t found a perfect social CRM tool for that. Most tools that add a social CRM layer to Twitter depend on the data from Twitter itself, so if you want to discontinue your Twitter account like Andy Beard did, you lose your relationships. If they don’t rely on Twitter, you have to add your contacts manually, which means more effort and work.
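For anyone who would rather script the archiving than rely on an RSS reader, here is a minimal sketch of the idea. It assumes you already have the feed as an RSS 2.0 XML string (how you fetch it is up to you) and keeps the archive as a plain dictionary; the function name `merge_feed_into_archive` and the archive layout are my own invention, not anything Twitter provides.

```python
import xml.etree.ElementTree as ET


def merge_feed_into_archive(rss_xml, archive):
    """Add feed items not yet in `archive` (a dict keyed by guid or link).

    Returns the number of newly archived items.
    """
    root = ET.fromstring(rss_xml)
    added = 0
    for item in root.iter("item"):
        # Prefer the guid as a stable key and fall back to the link.
        key = item.findtext("guid") or item.findtext("link")
        if not key or key in archive:
            continue  # skip items without a key or already archived
        archive[key] = {
            "title": item.findtext("title", default=""),
            "pubDate": item.findtext("pubDate", default=""),
        }
        added += 1
    return added
```

Running it on every new copy of the feed only adds what is missing, so old tweets stay in the archive after they drop out of the feed. Writing the dictionary to disk (with `json.dump`, for example) is left out to keep the sketch short.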

Twitter seems to obstruct indexing of tweets on purpose. PageRank sculpting by using nofollow does not work anymore these days, so Twitter does not use nofollow for SEO reasons.

They either assume that all user generated content is potential spam or they want to sell searchability in future. They might want to charge for archives and search of older tweets. I don’t like this idea of selling your content and relationships back to you. It’s time to re-evaluate the way we use Twitter.

Twitter has stopped access to its data for many free tools out there. Now they limit the access of search engines to the content. What comes next? Follow 100 people for free and pay for more? Just in case, follow me on Google Buzz as well.

When writing the copy for your site, write it for your viewer and not for the search engines.

A lot of people get tempted to use the same word or phrase over and over to try to get themselves placed first on a web search. This is a no-no and can also get you bumped for what’s called keyword flooding. Here are some examples:

Pizza Jim is located in Barton upon Humber, North Lincolnshire. Here at Pizza Jim we specialize in pizzas, pizza calzone, pizza stuffed crust, pizza pie. In Barton upon Humber we feel we are the best available in Barton and so strive to give the people of Barton the best possible service.

Pizza Jim is located in Barton upon Humber, North Lincolnshire. As well as pizzas we also serve top quality kebabs and sundries. We always go above and beyond for all of our customers, etc.

I’m sure you notice the difference. The pizzajim.co.uk site does show up in the top ten for the search term “Pizza Barton upon Humber”. However, that was before we wrote this article. So how many times can you include the same search term? Only the search engine companies know that for sure. However, based on our experience with sites we have successfully placed in the top ten, we would say no more than once every two paragraphs or once every 100 words.
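If you want to check your own copy against that rule of thumb, a quick sketch along these lines will do. The function names `keyword_rate` and `is_flooded` are made up for this example, and the once-per-100-words limit is only our own guideline from above, not anything published by a search engine.

```python
import re


def keyword_rate(text, phrase):
    """Return how many times `phrase` occurs per 100 words of `text` (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide a window of len(target) words over the text and count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * 100.0 / len(words)


def is_flooded(text, phrase, max_per_100_words=1.0):
    """True if `phrase` appears more often than the assumed rule of thumb allows."""
    return keyword_rate(text, phrase) > max_per_100_words


if __name__ == "__main__":
    stuffed = "Here at Pizza Jim we specialize in pizzas, pizza calzone, pizza stuffed crust, pizza pie."
    print(keyword_rate(stuffed, "pizza"), is_flooded(stuffed, "pizza"))
```

On a short snippet almost any repeated word will trip the limit, so run it on a whole page of copy rather than a single sentence.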

Style sheets are one of those things that are easy to abuse.

When used correctly, they can really help you when optimizing a site. To abuse the use of style sheets is simple.

All you have to do is make your H1 tag the same as your normal text style to try and make the crawler (search engine software) think that all your text is important.

The trouble is crawlers are better than they used to be.

They figured out that nobody has a header that lasts 8 paragraphs. The proper use of the H1 tag is your main page header. For example, this page is “Peter Bending”. Sub headers like “A touch of style and class” should use the H2 tag and your content should always be normal text.
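To sanity-check a page against this, something like the sketch below can flag the obvious mistakes. It uses Python’s standard `html.parser`; the names `HeadingAudit` and `audit_headings`, the “exactly one H1” check and the 12-word limit are all assumptions made for this illustration, not rules any search engine has published.

```python
from html.parser import HTMLParser


class HeadingAudit(HTMLParser):
    """Records the text of every h1 and h2 element on a page."""

    def __init__(self):
        super().__init__()
        self.headings = []    # list of (tag, text) tuples
        self._current = None  # heading tag we are currently inside, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2"):
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            self.headings.append((tag, "".join(self._buffer).strip()))
            self._current = None


def audit_headings(html, max_h1_words=12):
    """Return a list of problems found with the page's h1 usage."""
    parser = HeadingAudit()
    parser.feed(html)
    parser.close()
    h1s = [text for tag, text in parser.headings if tag == "h1"]
    problems = []
    if len(h1s) != 1:
        problems.append(f"expected exactly one h1, found {len(h1s)}")
    for text in h1s:
        # A header that runs on for paragraphs is a sign of H1 abuse.
        if len(text.split()) > max_h1_words:
            problems.append("h1 is too long to be a real page header")
    return problems
```

A page with one short H1, H2 sub headers and normal body text comes back with an empty list.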

Never, no matter how tempting it is, hide any text by use of style sheets or any other method, because your site will be bumped. Also, do not make the folder that your style sheet is in hidden or private because, if the web crawler can’t read your style sheet, it’s not going to trust that everything is legitimate.

If you have downloaded Google’s toolbar, you will see a bar at the top of each page which gives you a rating from 0 to 10 of how Google ranks your site.

Google describes it like this: “PageRank reflects our view of the importance of web pages by considering more than 500 million variables and 2 billion terms. Pages that we believe are important pages receive a higher PageRank and are more likely to appear at the top of the search results. PageRank also considers the importance of each page that casts a vote, as votes from some pages are considered to have greater value, thus giving the linked page greater value. We have always taken a pragmatic approach to help improve search quality and create useful products, and our technology uses the collective intelligence of the web to determine a page’s importance.”

Some search engine optimization companies think that getting a high PageRank means you are going to be listed higher in the search. This is not the case. In fact, it couldn’t be further from the truth. What this little bar indicates is simply how important Google considers your site, and it makes that judgment on the quality of the sites that link to you.

The single most important thing about your site is not PageRank, it is not how many incoming links you have and it is not how long your site has been up.

It is your content, plain and simple.

If your content matches the search criteria, then you will be listed. The more relevant content you have, the higher your site will be listed.

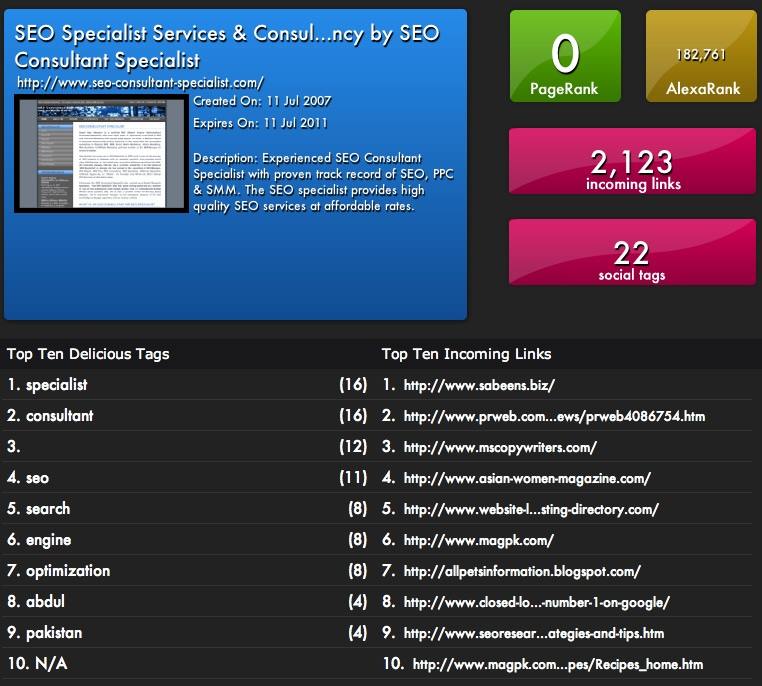

As I’m writing this, https://www.seo-consultant-specialist.com/ is number one for the term “seo specialist”.